If you’re diving into the world of data, understanding data engineering is a must. This guide covers what data engineering is, why it matters, the tools you need, and how to start building your skills. We’ll keep it simple and conversational, so even a complete beginner can follow along.

What is Data Engineering?

Data engineering is the process of designing, building, and maintaining systems that allow organizations to collect, store, and analyze data efficiently. Think of it as constructing the foundation and pipelines that ensure data flows smoothly from its source to the people who need it—analysts, data scientists, and decision-makers.

While data scientists focus on analyzing data to gain insights, data engineers focus on building the infrastructure that makes that analysis possible. In other words, data engineers create the roads, bridges, and tunnels that allow data to travel safely and reliably.

Why is Data Engineering Important?

In today’s data-driven world, businesses generate massive amounts of data every second. Without proper data pipelines and infrastructure:

- Data can become inconsistent or inaccurate.

- Analysts and data scientists may waste time cleaning and organizing data instead of analyzing it.

- Decision-making can become slower and less reliable.

This is where data engineers come in—they make sure data is clean, reliable, and accessible, allowing businesses to make faster, smarter decisions.

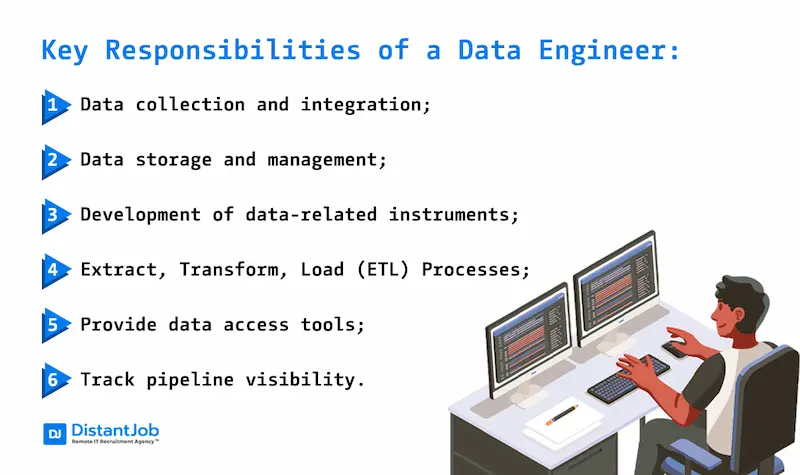

Key Responsibilities of a Data Engineer

Here’s a detailed look at what data engineers actually do on the job:

1. Data Collection

Data comes from various sources, such as:

- Internal databases

- External APIs

- Social media platforms

- IoT devices and sensors

Data engineers gather this data efficiently so it can be processed and stored.

2. Data Cleaning

Raw data is rarely perfect. Data engineers:

- Remove duplicates

- Handle missing values

- Standardize formats

- Ensure data accuracy

This makes the data analysis-ready, saving analysts countless hours.
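
To make these steps concrete, here is a minimal sketch in plain Python. The records, field names, and the two accepted date formats are invented for illustration; real pipelines usually rely on a library such as pandas and on rules agreed with the data’s owners.

```python
from datetime import datetime

# Hypothetical raw records, invented for illustration.
RAW_ROWS = [
    {"id": "1", "email": " Ana@Example.com ", "signup": "2024-01-05"},
    {"id": "1", "email": "ana@example.com", "signup": "2024-01-05"},  # duplicate id
    {"id": "2", "email": None, "signup": "05/02/2024"},               # missing email
    {"id": "3", "email": "bo@example.com", "signup": "not a date"},   # bad date
]

# Assumed input formats: ISO dates and day/month/year.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Return an ISO date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean(rows):
    """Deduplicate by id, fill missing emails, and standardize formats."""
    seen_ids = set()
    cleaned = []
    for row in rows:
        if row["id"] in seen_ids:  # remove duplicates (first occurrence wins)
            continue
        seen_ids.add(row["id"])
        # Handle missing values, then standardize whitespace and casing.
        email = (row["email"] or "unknown").strip().lower()
        cleaned.append({
            "id": int(row["id"]),
            "email": email,
            "signup": parse_date(row["signup"]),  # None marks an unparseable date
        })
    return cleaned


cleaned_rows = clean(RAW_ROWS)
```

Keeping unparseable dates as `None` instead of guessing is a deliberate choice here: it keeps the problem visible to whoever uses the data next.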

3. Data Storage

Data needs to be stored securely and efficiently. Data engineers design:

- Databases (SQL, NoSQL) for structured and semi-structured data

- Data warehouses (Redshift, Snowflake, BigQuery) for analytical workloads

- Data lakes (built on storage such as S3 or HDFS) for large volumes of raw data in any format, including unstructured data

4. Data Transformation

Raw data often isn’t in the right format. Engineers use ETL (Extract, Transform, Load) pipelines to:

- Transform data into a consistent structure

- Merge data from multiple sources

- Make it easy to query and analyze
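
As a rough illustration of merging sources into one consistent structure, the sketch below joins two made-up datasets that use different field names. The source names (`crm_customers`, `shop_orders`) and all fields are hypothetical.

```python
# Hypothetical records from two sources that use different field names.
crm_customers = [
    {"CustomerID": 1, "FullName": "Ana Ruiz"},
    {"CustomerID": 2, "FullName": "Bo Chen"},
]
shop_orders = [
    {"cust": 1, "amount_cents": 1250},
    {"cust": 1, "amount_cents": 800},
    {"cust": 3, "amount_cents": 500},  # no matching customer in the CRM
]


def merge_sources(customers, orders):
    """Join orders to customers and emit rows that share one schema."""
    names = {c["CustomerID"]: c["FullName"] for c in customers}
    merged = []
    for order in orders:
        merged.append({
            "customer_id": order["cust"],
            "customer_name": names.get(order["cust"]),  # None if unknown
            "amount": order["amount_cents"] / 100,      # cents to currency units
        })
    return merged


merged_rows = merge_sources(crm_customers, shop_orders)
```

After the merge, every row has the same three fields, so a later query does not need to know which system each value came from.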

5. Maintaining Data Infrastructure

Data pipelines and systems must run continuously and efficiently. Data engineers monitor systems, optimize performance, and ensure scalability as data volume grows.
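
Monitoring often starts with very simple checks. The sketch below is one possible health check, assuming you record when a pipeline last succeeded and how many rows it loaded. The function name, thresholds, and messages are illustrative and do not come from any particular monitoring tool.

```python
from datetime import datetime, timedelta, timezone


def check_pipeline_health(last_success, row_count, now,
                          max_age=timedelta(hours=24), min_rows=1):
    """Return a list of human-readable problems; an empty list means healthy."""
    problems = []
    if now - last_success > max_age:
        problems.append("stale: last successful run is older than max_age")
    if row_count < min_rows:
        problems.append("low volume: fewer rows loaded than min_rows")
    return problems


# A fixed "now" keeps the example deterministic.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
healthy = check_pipeline_health(now - timedelta(hours=1), row_count=500, now=now)
stale = check_pipeline_health(now - timedelta(days=2), row_count=0, now=now)
```

In practice a check like this would run on a schedule and send its results to an alerting system instead of just returning a list.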

Core Concepts You Should Know

To truly understand data engineering, there are a few core concepts you need to be familiar with:

ETL (Extract, Transform, Load)

ETL is the backbone of data engineering:

- Extract: Pull data from multiple sources

- Transform: Clean and reshape data

- Load: Save it in a warehouse or database

ETL pipelines are essential for providing clean and structured data for analysis.
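
The three stages can be sketched with nothing but Python’s standard library. In this example the CSV text stands in for a real source system and an in-memory SQLite database stands in for a warehouse. The table, columns, and data are all made up.

```python
import csv
import io
import sqlite3

# Hypothetical CSV export standing in for a real source system.
SOURCE_CSV = """order_id,country,amount
1,us,10.50
2,US,4.25
3,de,
"""


def extract(text):
    """Extract: read raw rows from the CSV text."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows without an amount, normalize types and casing."""
    return [
        (int(r["order_id"]), r["country"].upper(), float(r["amount"]))
        for r in rows
        if r["amount"]
    ]


def load(conn, records):
    """Load: write the cleaned records into a table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, country TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory database as a stand-in warehouse
load(conn, transform(extract(SOURCE_CSV)))

# Once loaded, the data is easy to query.
total_by_country = dict(
    conn.execute("SELECT country, SUM(amount) FROM orders GROUP BY country")
)
```

Keeping extract, transform, and load as separate functions makes each stage easy to test and replace on its own, for example by swapping the CSV reader for an API client.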

Data Modeling

Data engineers design logical structures for data storage. Good data modeling ensures:

- Data is easy to query

- Storage is efficient

- Relationships between data points are clear
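
Here is a small sketch of what a clear relationship looks like in practice, using SQLite through Python. The two tables (`dim_customer` and `fact_order`) are hypothetical and follow a common naming habit for descriptive and transactional tables. It is one possible design, not the only one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

conn.executescript("""
CREATE TABLE dim_customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE fact_order (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES dim_customer(customer_id),
    amount      REAL NOT NULL
);
""")

conn.execute("INSERT INTO dim_customer VALUES (1, 'Ana Ruiz')")
conn.execute("INSERT INTO fact_order VALUES (100, 1, 12.5)")

# The model itself rejects an order that points at a customer who does not exist.
try:
    conn.execute("INSERT INTO fact_order VALUES (101, 99, 5.0)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

order_count = conn.execute("SELECT COUNT(*) FROM fact_order").fetchone()[0]
```

Because the relationship is declared in the schema, bad rows are stopped at write time instead of being discovered later during analysis.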

Big Data

With huge volumes of data, traditional databases can struggle. Big data tools help by spreading work across many machines: Hadoop and Spark process large datasets in batches, while Kafka and Spark’s streaming APIs handle data in near real time.

Cloud Platforms

Most modern data infrastructure runs on the cloud. Engineers often use services like:

- AWS (Redshift, S3, Glue)

- Google Cloud (BigQuery, Dataflow, Cloud Storage)

- Microsoft Azure (Azure Data Lake, Synapse Analytics)

Tools and Technologies Every Data Engineer Should Know

Here’s a more detailed list of essential tools:

Databases

- SQL Databases: MySQL, PostgreSQL, Oracle

- NoSQL Databases: MongoDB, Cassandra

Data Warehouses

- Amazon Redshift

- Google BigQuery

- Snowflake

ETL Tools

- Apache Airflow (workflow orchestration: schedules and monitors pipeline tasks)

- Talend

- Informatica

- dbt (data build tool, for SQL-based transformations inside the warehouse)

Big Data Tools

- Apache Hadoop

- Apache Spark

- Apache Kafka

Programming Languages

- Python: Most common for scripting ETL pipelines

- SQL: Essential for querying databases

- Scala/Java: Common when working with JVM-based big data frameworks such as Spark and Kafka

Data Engineering vs Data Science

It’s easy to confuse these roles, but they are complementary:

| Aspect | Data Engineer | Data Scientist |
| --- | --- | --- |
| Focus | Building and maintaining data infrastructure | Analyzing data and building models |
| Tools | SQL, Python, Spark, ETL tools | Python, R, ML libraries, Tableau |
| Goal | Make data accessible and reliable | Generate insights and predictions |

In short: Data engineers prepare the data, data scientists use the data.

How to Get Started in Data Engineering

If you’re ready to dive in, here’s a beginner-friendly roadmap:

- Learn SQL: Understand database queries, joins, and aggregations.

- Learn a Programming Language: Python is ideal for ETL and automation.

- Understand ETL Pipelines: Practice building simple pipelines to move and clean data.

- Learn About Big Data Tools: Explore Spark, Hadoop, and Kafka for large-scale data.

- Explore Cloud Platforms: AWS, GCP, and Azure are widely used in the industry.

- Work on Projects: Build sample pipelines, data warehouses, or dashboards to get hands-on experience.
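
For the first step of the roadmap, you can practice joins and aggregations without installing a database server, because SQLite ships with Python. The tables and rows below are invented for practice.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'Ana'), (2, 'Bo');
INSERT INTO orders VALUES (10, 1, 20.0), (11, 1, 5.0);
""")

# LEFT JOIN keeps customers with no orders; GROUP BY aggregates per customer.
query = """
SELECT c.name,
       COUNT(o.id)                AS order_count,
       COALESCE(SUM(o.amount), 0) AS total_spent
FROM customers AS c
LEFT JOIN orders AS o ON o.customer_id = c.id
GROUP BY c.id, c.name
ORDER BY c.name
"""
report = conn.execute(query).fetchall()
```

Try changing `LEFT JOIN` to `JOIN` and rerunning: the customer without orders drops out of the report, which is a quick way to see the difference between the two.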

Real-World Applications of Data Engineering

Data engineering is everywhere:

- E-commerce: Track customer behavior and inventory.

- Finance: Process transactions and detect fraud.

- Healthcare: Manage patient records and research data.

- Streaming Services: Recommend content using user behavior data.

Without robust data engineering, these industries couldn’t make real-time, data-driven decisions.

Final Thoughts

Data engineering is a dynamic and essential field in today’s tech-driven world. With the right skills, tools, and mindset, you can build efficient data systems that empower organizations to make smarter decisions.

Having worked through this guide, you now know what data engineering is, which tools are involved, and how to start your journey. Remember, it’s not just about technology. It’s about making data useful, accessible, and reliable.

With curiosity, patience, and practice, you can become a skilled data engineer and unlock the power of data for your career and future projects.

FAQs

1. What is data engineering?

Data engineering is designing, building, and maintaining systems that collect, store, and process data efficiently for analysis and decision-making.

2. How is it different from data science?

Data engineers build data infrastructure and pipelines; data scientists analyze the data and extract insights.

3. What skills are needed?

SQL, Python (or Java/Scala), ETL, data modeling, big data tools (Hadoop, Spark), and cloud platforms (AWS, GCP, Azure).

4. What tools do data engineers use?

Databases: MySQL, PostgreSQL, MongoDB

Data Warehouses: Redshift, BigQuery, Snowflake

ETL and orchestration: Airflow, Talend, dbt

Big Data: Spark, Hadoop, Kafka

Cloud: AWS, GCP, Azure

5. What is ETL?

ETL (Extract, Transform, Load) is the process of collecting data, cleaning/organizing it, and loading it into storage for analysis.